There are many more. As I am just getting my feet wet with Spring Integration, this is all I have covered so far.

Note that I have used Spring 3.0.3 for this project. If you are using Spring 2.0, fewer jars will be required. But as I used Spring Integration 2.0, I wanted to go with Spring 3.0.

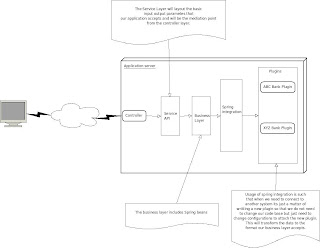

I will not go into detail on this diagram, as the diagram itself is self-explanatory. So now let's get our hands dirty with some code.

First off, I will start with the service layer:

package com.paymentgateway.services;

import com.paymentgateway.dto.PaymentRequestDTO;

import com.paymentgateway.dto.PaymentResponseDTO;

/**

* The service interface is what the client of our application interacts with.

* The client is not aware of Spring Integration being used.

*

* @author dinuka

*/

public interface PaymentService {

public PaymentResponseDTO makePayment(PaymentRequestDTO paymentRequestDTO);

}

package com.paymentgateway.services;

import java.util.HashMap;

import java.util.Map;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.integration.message.GenericMessage;

import org.springframework.stereotype.Component;

import com.paymentgateway.dto.PaymentRequestDTO;

import com.paymentgateway.dto.PaymentResponseDTO;

import com.paymentgateway.dto.PaymentStatusCode;

import com.paymentgateway.dto.SystemActions;

import com.paymentgateway.gateway.CentralPaymentGateway;

@Component("paymentService")

public class PaymentServiceImpl implements PaymentService {

@Autowired

private CentralPaymentGateway gateway;

@Override

public PaymentResponseDTO makePayment(PaymentRequestDTO paymentRequestDTO) {

/**

* Here you can do any validation checks for null values if you need

* and throw any relevant exception as needed. For simplicity purposes

* I have not done so here.

*/

/**

* In the header we specify the banking system this message needs to be routed to<br>

* Then in the

*/

Map<String, Object> headerMap = new HashMap<String, Object>();

headerMap.put("BANKING_SYSTEM", paymentRequestDTO.getBankingSystem());

headerMap.put("ACTION", SystemActions.PAYMENT.toString());

GenericMessage<PaymentRequestDTO> paymentRequestMsg = new GenericMessage<PaymentRequestDTO>(paymentRequestDTO,

headerMap);

PaymentResponseDTO paymentResponseDTO = gateway.makePayment(paymentRequestMsg);

if (paymentResponseDTO.getStatusCode() == PaymentStatusCode.FAILURE) {

/**

* Throw relevant exception

*/

}

return paymentResponseDTO;

}

}

And the DTOs used are as follows:

package com.paymentgateway.dto;

import java.io.Serializable;

/**

* This DTO holds the data that needs to be passed to the

* relevant plugin in order to make a payment

*

* @author dinuka

*/

public class PaymentRequestDTO implements Serializable {

/**

*

*/

private static final long serialVersionUID = 582470760696219645L;

/**

* The account number of the customer

*/

private String accountNumber;

/**

* The amount needed to be reduced

*/

private Double deductAmount;

/**

* The First Name of the customer

*/

private String firstName;

/**

* The Last Name of the customer

*/

private String lastName;

/**

* This should ideally be moved to a CommonDTO as this will be reused by all

* subsequent DTOs. Default banking system is "abc". The client needs to set

* which banking system is needed to connect to.

*/

private String bankingSystem = "abc";

public String getAccountNumber() {

return accountNumber;

}

public void setAccountNumber(String accountNumber) {

this.accountNumber = accountNumber;

}

public Double getDeductAmount() {

return deductAmount;

}

public void setDeductAmount(Double deductAmount) {

this.deductAmount = deductAmount;

}

public String getFirstName() {

return firstName;

}

public void setFirstName(String firstName) {

this.firstName = firstName;

}

public String getLastName() {

return lastName;

}

public void setLastName(String lastName) {

this.lastName = lastName;

}

public String getBankingSystem() {

return bankingSystem;

}

public void setBankingSystem(String bankingSystem) {

this.bankingSystem = bankingSystem;

}

@Override

public String toString() {

return "PaymentRequestDTO [accountNumber=" + accountNumber + ", deductAmount=" + deductAmount + ", firstName="

+ firstName + ", lastName=" + lastName + "]";

}

}

package com.paymentgateway.dto;

import java.io.Serializable;

/**

* This is the default payment response DTO that every plugin

* must return back to the system

*

* @author dinuka

*/

public class PaymentResponseDTO implements Serializable {

/**

*

*/

private static final long serialVersionUID = 2773607380706313950L;

/**

* The account number of the customer

*/

private String accountNumber;

/**

* The first name of the customer

*/

private String firstName;

/**

* The last name of the customer

*/

private String lastName;

/**

* The remaining balance in the account of the customer

*/

private Double availableBalance;

/**

* The balance reduced from the customer account

*/

private Double reducedBalance;

/**

* The status code indicating whether the transaction was a success or not

*/

private PaymentStatusCode statusCode = PaymentStatusCode.SUCCESS;

/**

* The transaction id assigned to the relevant transaction

*/

private Long transationId;

public String getAccountNumber() {

return accountNumber;

}

public void setAccountNumber(String accountNumber) {

this.accountNumber = accountNumber;

}

public String getFirstName() {

return firstName;

}

public void setFirstName(String firstName) {

this.firstName = firstName;

}

public String getLastName() {

return lastName;

}

public void setLastName(String lastName) {

this.lastName = lastName;

}

public Double getAvailableBalance() {

return availableBalance;

}

public void setAvailableBalance(Double availableBalance) {

this.availableBalance = availableBalance;

}

public Double getReducedBalance() {

return reducedBalance;

}

public void setReducedBalance(Double reducedBalance) {

this.reducedBalance = reducedBalance;

}

public PaymentStatusCode getStatusCode() {

return statusCode;

}

public void setStatusCode(PaymentStatusCode statusCode) {

this.statusCode = statusCode;

}

public Long getTransationId() {

return transationId;

}

public void setTransationId(Long transationId) {

this.transationId = transationId;

}

@Override

public String toString() {

return "PaymentResponseDTO [accountNumber=" + accountNumber + ", firstName=" + firstName + ", lastName="

+ lastName + ", availableBalance=" + availableBalance + ", reducedBalance=" + reducedBalance

+ ", statusCode=" + statusCode + ", transationId=" + transationId + "]";

}

}

package com.paymentgateway.dto;

/**

* The status codes returned from each plugin indicating

* if the transaction was a success or not

*

* @author dinuka

*/

public enum PaymentStatusCode {

SUCCESS, FAILURE

}

package com.paymentgateway.dto;

import com.paymentgateway.util.PaymentRouter;

/**

* This enum defines the system wide actions

* We use this name in our {@link PaymentRouter}

* to decide which channel to route the message

*

* @author dinuka

*/

public enum SystemActions {

PAYMENT {

@Override

public String toString() {

return "Payment";

}

}

}

Those are the DTOs I have used. Moving on, as the second diagram above specified, we have defined a Central Gateway and a Router. So let's see how we have implemented those using Spring Integration:

package com.paymentgateway.gateway;

import org.springframework.integration.message.GenericMessage;

import com.paymentgateway.dto.PaymentRequestDTO;

import com.paymentgateway.dto.PaymentResponseDTO;

/**

* This interface represents the common gateway which

* will be used by Spring Integration to wire up the plugins

* and also will be the central and first point of contact

* by any client calling our business layer

*

* @author dinuka

*/

public interface CentralPaymentGateway {

/**

* This method takes a parameter type of {@link GenericMessage} which wraps<br>

* an instance of {@link PaymentRequestDTO}. Usage of sending an instance of<br>

* Generic Message is so that we can add header values which can indicate<br>

* which banking system to call to

*

* @param paymentRequestDTO

* @return

*/

public PaymentResponseDTO makePayment(GenericMessage<PaymentRequestDTO> paymentRequestDTO);

}

Note that the gateway is just an interface defining our input parameters. We have used the GenericMessage defined by Spring Integration. If you go back to the service layer implementation, you can see that we have populated an instance of GenericMessage with the relevant DTO, which is passed on to the gateway. The gateway here acts as a mediation layer.
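The reason an interface alone is enough is that the `<int:gateway>` element shown later asks Spring Integration to create a proxy for that interface at runtime. The following is only a rough analogy using the JDK's own `java.lang.reflect.Proxy`, not what Spring Integration does internally. The nested `Gateway` interface and the reply string are hypothetical stand-ins so that the sketch runs without any Spring jars.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;

// Stand-alone analogy: a JDK dynamic proxy implements an interface we never code by hand.
class GatewayProxySketch {

    // Hypothetical stand-in for CentralPaymentGateway, using Strings instead of DTOs.
    interface Gateway {
        String makePayment(String request);
    }

    static Gateway createGateway() {
        // In the real application the call would be turned into a message and put
        // on the request channel; here we simply build a reply string.
        InvocationHandler handler = (proxy, method, args) ->
                "reply to " + args[0] + " via " + method.getName();
        return (Gateway) Proxy.newProxyInstance(
                Gateway.class.getClassLoader(), new Class<?>[] {Gateway.class}, handler);
    }

    public static void main(String[] args) {
        System.out.println(createGateway().makePayment("request-1"));
    }
}
```

The caller only ever sees the interface, which is why the service layer can stay unaware of the messaging underneath.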

Moving on with the Router implementation;

package com.paymentgateway.util;

import org.springframework.integration.Message;

/**

* This is the base Router for All payment related functions

* We route the message based on the banking system and the action

* which comes in the header of the message. Of course, we can enhance this

* to put the message on an error queue if the {@link Message} does not have the

* relevant header values.

*

* @author dinuka

*/

public class PaymentRouter {

public String resolveBankChannel(Message<?> message) {

return (String) message.getHeaders().get("BANKING_SYSTEM") + (String) message.getHeaders().get("ACTION")

+ "Channel";

}

}

Again, if you go back to the PaymentServiceImpl class, you can see that we set the two headers BANKING_SYSTEM and ACTION. The router uses them to decide which channel the message should go on. You can see this in the next section, when we wire all of this together.
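To make the naming rule concrete, here is a minimal stand-alone sketch of the same concatenation. It assumes a plain `Map` in place of Spring Integration's message headers, and the class name `ChannelNameSketch` is made up for illustration.

```java
import java.util.HashMap;
import java.util.Map;

// Stand-alone sketch: a plain Map stands in for the message headers.
class ChannelNameSketch {

    // Mirrors PaymentRouter.resolveBankChannel: banking system + action + "Channel".
    static String resolveBankChannel(Map<String, Object> headers) {
        return (String) headers.get("BANKING_SYSTEM") + (String) headers.get("ACTION") + "Channel";
    }

    public static void main(String[] args) {
        Map<String, Object> headers = new HashMap<String, Object>();
        headers.put("BANKING_SYSTEM", "abc"); // default value in PaymentRequestDTO
        headers.put("ACTION", "Payment");     // SystemActions.PAYMENT.toString()
        System.out.println(resolveBankChannel(headers)); // prints abcPaymentChannel
    }
}
```

With the default banking system "abc" and the PAYMENT action, the name comes out as `abcPaymentChannel`, so a channel with exactly that id has to exist in the Spring configuration.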

The Spring configurations are as follows:

First off, I present the main config file, named context-config.xml. This mainly injects the service layer beans.

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:context="http://www.springframework.org/schema/context"

xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-3.0.xsd

http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-3.0.xsd">

<context:component-scan base-package="com.paymentgateway.services" />

<context:annotation-config />

</beans>

Next we look at the core configuration, where we wire up the Spring Integration related components:

spring-integration-config.xml

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:int="http://www.springframework.org/schema/integration"

xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-3.0.xsd

http://www.springframework.org/schema/integration http://www.springframework.org/schema/integration/spring-integration-2.0.xsd">

<!-- The generic input channel which would be used to pass through all messages

coming into the Central Gateway -->

<int:channel id="inputChannel"></int:channel>

<!-- Here we wire up the Central Gateway which is the central point of access

from our service layer -->

<int:gateway id="gateway" default-request-channel="inputChannel"

service-interface="com.paymentgateway.gateway.CentralPaymentGateway"

default-reply-channel="outputChannel"></int:gateway>

<!-- This is the generic output channel which will be used to send the

output from any plugin. -->

<int:channel id="outputChannel"></int:channel>

<!-- The router decides which channel to send each message arriving on the

input channel to. The client supplies the name of the banking system and the

action in the message headers, and the defaultRouter bean appends the

'Channel' keyword to them (for example, 'abcPaymentChannel'). -->

<int:router id="centralRouter" ref="defaultRouter" method="resolveBankChannel"

input-channel="inputChannel"></int:router>

<bean id="defaultRouter" name="defaultRouter"

class="com.paymentgateway.util.PaymentRouter" />

</beans>

That is the core configuration, which wires up the Gateway and the Router and defines the channels required by the application. Next, let's go into our plugin (the first of many plugins to come), the ABC Bank Plugin.

First we define the base plugin interface, which all plugin developers should adhere to:

package com.paymentgateway.plugins;

import com.paymentgateway.dto.PaymentRequestDTO;

import com.paymentgateway.dto.PaymentResponseDTO;

/**

* This is the base plugin interface. All plugin developers should adhere to<br>

* this interface when they write new plugins connecting to different banking<br>

* systems.

*

* @author dinuka

*/

public interface BasePlugin {

public PaymentResponseDTO makePayment(PaymentRequestDTO paymentRequestDTO);

}

And the implementation of this interface is as follows:

package com.paymentgateway.plugins;

import com.paymentgateway.dto.PaymentRequestDTO;

import com.paymentgateway.dto.PaymentResponseDTO;

import com.paymentgateway.dto.PaymentStatusCode;

/**

* This is the plugin used to connect to the ABC banking system

* in order to do the payment transaction.

*

* @author dinuka

*/

public class ABCBankPlugin implements BasePlugin {

/**

* Right now we just return a mock value. But when the true implementation

* comes you will deal with any connection related information

* at this point.

*/

@Override

public PaymentResponseDTO makePayment(PaymentRequestDTO paymentRequestDTO) {

PaymentResponseDTO paymentResponseDTO = new PaymentResponseDTO();

paymentResponseDTO.setAccountNumber("abc123");

paymentResponseDTO.setAvailableBalance(10000d);

paymentResponseDTO.setFirstName("Dinuka");

paymentResponseDTO.setLastName("Arseculeratne");

paymentResponseDTO.setReducedBalance(500d);

paymentResponseDTO.setStatusCode(PaymentStatusCode.SUCCESS);

paymentResponseDTO.setTransationId(1233424234L);

return paymentResponseDTO;

}

}

As this is just a mock implementation, I have simply returned the response DTO with values filled in. Now that we have developed our plugin, let's wire it up:

abc_bank_plugin-config.xml

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:int="http://www.springframework.org/schema/integration"

xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-3.0.xsd

http://www.springframework.org/schema/integration http://www.springframework.org/schema/integration/spring-integration-2.0.xsd">

<!-- Start of ABC Banking System Plugin Injection -->

<!-- This is the payment channel used for the ABC banking system -->

<int:channel id="abcPaymentChannel"></int:channel>

<!-- Wire up the ABC Banking plugin -->

<bean id="abcBakingSysPlugin" name="abcBakingSysPlugin"

class="com.paymentgateway.plugins.ABCBankPlugin" />

<!-- This service activator is used to handle the payment response from

ABC banking system -->

<int:service-activator input-channel="abcPaymentChannel"

ref="abcBakingSysPlugin" method="makePayment" output-channel="outputChannel"></int:service-activator>

<!-- End of ABC Banking System Plugin Injection -->

</beans>
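With the plugin wired up, the path a request takes (gateway, router, plugin channel, reply) can be approximated in plain Java. This is a simulation under loud assumptions: a `Map` of functions stands in for the channels and service activators, Strings stand in for the DTOs, and the "xyz" bank is hypothetical. No Spring classes are involved.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Stand-alone simulation of the message flow; no Spring classes are used.
class FlowSketch {

    // Channel name -> handler, standing in for the channels and service activators.
    static final Map<String, Function<String, String>> CHANNELS = new HashMap<>();

    static {
        // Rough equivalent of abc_bank_plugin-config.xml: one channel, one plugin.
        CHANNELS.put("abcPaymentChannel", account -> "ABC paid for " + account);
        // A second, hypothetical plugin only needs its own registration.
        CHANNELS.put("xyzPaymentChannel", account -> "XYZ paid for " + account);
    }

    // Gateway plus router: derive the channel name and dispatch to the plugin.
    static String makePayment(String bankingSystem, String account) {
        String channel = bankingSystem + "Payment" + "Channel";
        Function<String, String> plugin = CHANNELS.get(channel);
        if (plugin == null) {
            throw new IllegalArgumentException("No plugin wired for " + channel);
        }
        return plugin.apply(account);
    }

    public static void main(String[] args) {
        System.out.println(makePayment("abc", "abc123")); // prints ABC paid for abc123
        System.out.println(makePayment("xyz", "xyz456")); // prints XYZ paid for xyz456
    }
}
```

The point of the sketch is that the dispatching code never changes when a bank is added; only a new registration (in the real application, a new plugin config file) is needed.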

And lastly, I present a test class so that you can run the solution given above:

package com.paymentgateway.test;

import org.springframework.context.ApplicationContext;

import org.springframework.context.support.ClassPathXmlApplicationContext;

import com.paymentgateway.dto.PaymentRequestDTO;

import com.paymentgateway.dto.PaymentResponseDTO;

import com.paymentgateway.services.PaymentService;

/**

* This is a test class showing how it all comes together

*

* @author dinuka

*/

public class TestBankingApp {

public static void main(String[] args) {

ApplicationContext context = new ClassPathXmlApplicationContext("context-config.xml",

"spring-integration-config.xml","abc_bank_plugin-config.xml");

PaymentService paymentService = (PaymentService) context.getBean("paymentService");

PaymentRequestDTO paymentRequestDTO = new PaymentRequestDTO();

PaymentResponseDTO paymentResponseDTO = paymentService.makePayment(paymentRequestDTO);

/**

* We just print out the resulting DTO returned from the plugin<br>

* as this is just a tutorial

*/

System.out.println(paymentResponseDTO);

}

}

That's it. You're done with your plugin architecture. If you ever develop another plugin, all you have to do is implement the BasePlugin interface and provide its Spring wiring file. The following diagram explains the flow in which the message travels, which will give you an even clearer picture of what we have accomplished:

Future Enhancements:

- Implement a transformer pattern which will do the conversion of DTOs to application specific DTOs.

- Introduce an error channel into which any errors raised will be put.
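As a starting point for the error channel idea, the router could fall back to a dedicated channel name when a header is missing instead of producing a name such as "nullPaymentChannel". The sketch below is one possible shape, not part of the project: the channel name `paymentErrorChannel` and the class name are hypothetical, and a plain `Map` again stands in for the message headers.

```java
import java.util.HashMap;
import java.util.Map;

// Stand-alone sketch of a router that guards against missing headers.
class SafeRouterSketch {

    // Hypothetical channel name; a channel with this id would have to be declared.
    static final String ERROR_CHANNEL = "paymentErrorChannel";

    static String resolveBankChannel(Map<String, Object> headers) {
        Object bankingSystem = headers.get("BANKING_SYSTEM");
        Object action = headers.get("ACTION");
        // instanceof is false for null, so this covers missing and non-String values.
        if (!(bankingSystem instanceof String) || !(action instanceof String)) {
            return ERROR_CHANNEL;
        }
        return (String) bankingSystem + (String) action + "Channel";
    }

    public static void main(String[] args) {
        Map<String, Object> headers = new HashMap<String, Object>();
        System.out.println(resolveBankChannel(headers)); // prints paymentErrorChannel
        headers.put("BANKING_SYSTEM", "abc");
        headers.put("ACTION", "Payment");
        System.out.println(resolveBankChannel(headers)); // prints abcPaymentChannel
    }
}
```

Something would still need to consume that channel, for example a service activator that logs the message or builds a FAILURE response.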

That's it, guys. Your comments and suggestions are most welcome.

References:

[1] http://static.springsource.org/spring-integration/docs/2.0.0.RELEASE/reference/htmlsingle/